Standard Deviation is an absolute measure of the dispersion of a series. It expresses the typical amount of variation on either side of the mean. It is often confused with the standard error, because the standard error is computed from the standard deviation and the sample size.

Standard Error is used to measure the statistical accuracy of an estimate. It is primarily used in hypothesis testing and interval estimation.

These are two important concepts in statistics, and both are widely used in research. The difference between standard deviation and standard error comes down to the difference between describing data and drawing inferences from it.

Comparison Chart

| Basis for Comparison | Standard Deviation | Standard Error |
|---|---|---|
| Meaning | Standard Deviation is a measure of the dispersion of a set of values from their mean. | Standard Error is a measure of the statistical precision of an estimate. |
| Statistic | Descriptive | Inferential |
| Measures | How much the observations vary from each other. | How precisely the sample mean estimates the true population mean. |
| Distribution | Spread of the distribution of observations, interpreted with reference to the normal curve. | Spread of the distribution of an estimate, interpreted with reference to the normal curve. |
| Formula | Square root of the variance. | Standard deviation divided by the square root of the sample size. |
| Increase in sample size | Gives a more precise estimate of the standard deviation. | Decreases the standard error. |

Definition of Standard Deviation

Standard Deviation is a measure of the spread of a series, that is, of how far its values typically lie from the mean. Karl Pearson coined the term standard deviation in 1893, and it is among the most widely used measures of dispersion in research studies.

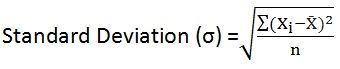

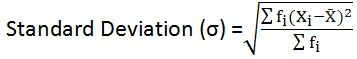

It is the square root of the average of the squared deviations from the mean. In other words, for a given data set, the standard deviation is the root-mean-square deviation from the arithmetic mean. For a whole population it is denoted by the Greek letter sigma (σ), and for a sample it is denoted by the Latin letter s.

Standard Deviation quantifies the degree of dispersion of a set of observations. The farther the data points lie from the mean, the greater the deviation within the data set, which indicates that the data points are scattered over a wider range of values. Conversely, data points that cluster close to the mean give a small standard deviation.
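The definition above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the data values are made up for the example, and the sample form divides by n - 1 (Bessel's correction), which is a common convention that the text above does not spell out.

```python
import math
import statistics

# Illustrative values only; they are not taken from the article.
data = [2, 4, 4, 4, 5, 5, 7, 9]

mean = statistics.fmean(data)
squared_deviations = [(x - mean) ** 2 for x in data]

# Population form (sigma): divide the sum of squared deviations by n.
population_sd = math.sqrt(sum(squared_deviations) / len(data))

# Sample form (s): divide by n - 1, a common convention (assumption here).
sample_sd = math.sqrt(sum(squared_deviations) / (len(data) - 1))

# The standard library offers both forms directly.
assert math.isclose(population_sd, statistics.pstdev(data))
assert math.isclose(sample_sd, statistics.stdev(data))

print(mean, population_sd, round(sample_sd, 4))  # 5.0 2.0 2.1381
```

For these values the squared deviations sum to 32, so the population form gives √(32/8) = 2 and the sample form gives √(32/7) ≈ 2.1381.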

Definition of Standard Error

You might have observed that different samples of identical size, drawn from the same population, give different values of the statistic under consideration, such as the sample mean. Standard Error (SE) is the standard deviation of those different values of the sample mean. It is used when comparing sample means across populations.

In short, the standard error of a statistic is the standard deviation of its sampling distribution. It plays a major role in the testing of statistical hypotheses and in interval estimation. It gives an idea of the precision and reliability of the estimate. The smaller the standard error, the more tightly the sampling distribution is concentrated around the true value, and vice versa.

- Formula: Standard Error of the sample mean = σ/√n

Where σ is the population standard deviation and n is the sample size.
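As a rough sketch of how the formula is applied in practice: the population standard deviation σ is usually unknown, so the example below substitutes the sample standard deviation s for it. That substitution, the helper name `standard_error_of_mean`, and the data values are assumptions made for illustration.

```python
import math
import statistics

def standard_error_of_mean(sample):
    """Estimate the standard error of the mean as s / sqrt(n).

    s is the sample standard deviation, used here as a stand-in
    for the population value sigma (an assumption of this sketch).
    """
    n = len(sample)
    if n < 2:
        raise ValueError("need at least two observations")
    return statistics.stdev(sample) / math.sqrt(n)

# Illustrative values only; they are not taken from the article.
sample = [2, 4, 4, 4, 5, 5, 7, 9]
se = standard_error_of_mean(sample)
print(round(se, 4))  # 0.7559
```

Here s = √(32/7) and n = 8, so the estimate is √(32/7)/√8 = √(4/7) ≈ 0.7559.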

Key Differences Between Standard Deviation and Standard Error

The points below set out the main differences between standard deviation and standard error:

- Standard Deviation is the measure which assesses the amount of variation in the set of observations. Standard Error gauges the accuracy of an estimate, i.e. it is the measure of variability of the theoretical distribution of a statistic.

- Standard Deviation is a descriptive statistic, whereas the standard error is an inferential statistic.

- Standard Deviation measures how far the individual values are from the mean value. The standard error, on the contrary, measures how close the sample mean is likely to be to the population mean.

- Standard Deviation describes the spread of the distribution of observations. As against this, the standard error describes the spread of the distribution of an estimate. Both are commonly interpreted with reference to the normal curve.

- Standard Deviation is defined as the square root of the variance. Conversely, the standard error is defined as the standard deviation divided by the square root of the sample size.

- When the sample size is increased, the standard deviation is estimated more precisely, but it does not systematically shrink. The standard error, by contrast, tends to decrease as the sample size increases.
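The last two points can be checked with a small simulation. The sketch below draws repeated samples from a normal population and compares the standard deviation of the resulting sample means with σ/√n. The population parameters, sample sizes, replicate count, and seed are all arbitrary choices made for illustration.

```python
import math
import random
import statistics

random.seed(0)  # fixed seed so the run is repeatable

MU = 50.0          # assumed population mean
SIGMA = 10.0       # assumed population standard deviation
REPLICATES = 1000  # samples drawn for each sample size

results = {}
for n in (10, 40, 160):
    means, sds = [], []
    for _ in range(REPLICATES):
        sample = [random.gauss(MU, SIGMA) for _ in range(n)]
        means.append(statistics.fmean(sample))
        sds.append(statistics.stdev(sample))
    results[n] = {
        # Typical spread of individual observations within one sample.
        "avg_sample_sd": statistics.fmean(sds),
        # Spread of the sample means, i.e. the simulated standard error.
        "empirical_se": statistics.stdev(means),
        # Value predicted by sigma / sqrt(n).
        "theoretical_se": SIGMA / math.sqrt(n),
    }

for n, row in results.items():
    print(n, {name: round(value, 3) for name, value in row.items()})
```

In a run like this the average sample standard deviation should stay close to 10 for every n, while the simulated standard error should track σ/√n and roughly halve each time the sample size is quadrupled.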

Conclusion

By and large, the standard deviation is considered one of the best measures of dispersion, as it gauges the dispersion of values from the central value. The standard error, on the other hand, is mainly used to check the reliability and accuracy of an estimate: the smaller the standard error, the greater the reliability and accuracy of the estimate.
